IMU Thermal Calibration

Improve your IMU's accuracy by calibrating for temperature changes.

Introduction

IMU Overview

IMUs (Inertial Measurement Units) are remarkable sensors that measure the movement and orientation of the devices they are embedded in. Since the rise of smartphones, their size and cost have dropped drastically, opening up a wide range of applications in robotics and drones, where they enable autonomous navigation and guidance.

IMUs combine a gyroscope (measuring angular velocity in °/s or rad/s) and an accelerometer (measuring linear acceleration in m/s² or g). Gyroscopes measure rotation rate, but the angle obtained by integrating that rate drifts over time. Accelerometers sense velocity changes and tilt but cannot distinguish between motion and gravity. Sensor fusion algorithms correct gyroscope drift with accelerometer data and smooth accelerometer noise with gyroscope input, ensuring accurate motion tracking.
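To make the fusion idea concrete, here is a minimal sketch of a complementary filter. This is one common and simple fusion technique, not necessarily what any particular IMU or flight stack uses. It estimates pitch from a gyro rate and an accelerometer reading. The axis convention, the `alpha = 0.98` weight, and the function names are illustrative assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (rad) implied by the gravity vector seen by the accelerometer.

    Assumes the IMU is not otherwise accelerating and uses the common
    pitch = atan2(-ax, sqrt(ay^2 + az^2)) convention (an assumption).
    """
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_filter(pitch_prev: float, gyro_rate_y: float,
                         accel: tuple[float, float, float], dt: float,
                         alpha: float = 0.98) -> float:
    """One update step: trust the integrated gyro rate short-term and pull
    slowly toward the drift-free (but noisy) accelerometer tilt."""
    gyro_pitch = pitch_prev + gyro_rate_y * dt  # integrating the rate accumulates drift
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch(*accel)


# Stationary IMU tilted by 10 degrees, gyro with a constant 0.01 rad/s bias.
g = 9.81
true_pitch = math.radians(10.0)
accel = (-g * math.sin(true_pitch), 0.0, g * math.cos(true_pitch))
pitch = 0.0
for _ in range(2000):  # 20 s at 100 Hz
    pitch = complementary_filter(pitch, 0.01, accel, dt=0.01)
```

With the 0.01 rad/s gyro bias, the blended estimate settles about 0.005 rad from the true tilt. Integrating the gyro alone would accumulate 0.2 rad of drift over the same 20 s.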

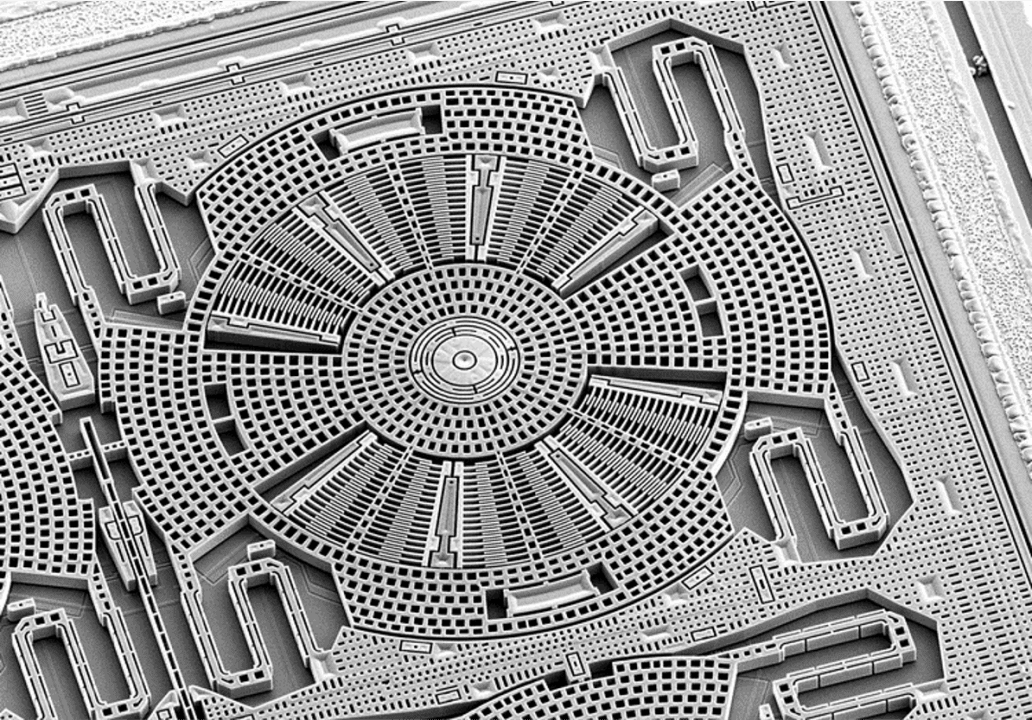

At the heart of an IMU is a Micro-Electro-Mechanical System (MEMS): a tiny structure that moves slightly in response to forces. Its features are often just a few microns wide, a small fraction of the width of a human hair. These movements cause small electrical changes (typically in capacitance), which the sensor measures, converts into numerical data, and transmits to your system through communication protocols such as SPI or I²C.

For everyday devices like smartphones or tablets, precision isn't as critical, so IMUs are not necessarily factory-calibrated for temperature. But for drones and robots used outdoors, accuracy matters, and calibrating the IMU for temperature can greatly improve its performance.

Calibration Purpose

Thermal calibration involves placing the IMU-equipped Printed Circuit Board Assembly (PCBA) in a climate chamber for several hours while varying the temperature. With the IMU kept flat and motionless, any changes in its measurements are attributed to temperature effects. The resulting data is used to calculate and save calibration parameters specific to each board. During operation, these parameters are applied in real time to compensate for temperature-induced measurement variations.

Most Electronic Manufacturing Service (EMS) providers have climate chambers for tasks like stress testing, so reserving one for your boards shouldn't be an issue. For lab use, you can purchase a small climate chamber for under $5,000, suitable for testing a few boards. The cost largely depends on the chamber's temperature range. For instance, testing a drone designed for outdoor use may require a chamber that operates between -20°C and 70°C to simulate extreme environmental conditions.

Note that the temperature measured by the IMU will always be higher than the chamber's temperature. This is because of self-heating in the IMU itself and in the powered PCBA it is mounted on.

Equipment & Setup

To implement thermal calibration for the IMU in a drone, you will need the following:

- A climate chamber capable of reaching temperatures between -20°C and 70°C.

- A support structure to hold the PCBA in the chamber, and a power supply for the board.

- A Device Under Test (DUT), equipped with the IMU that requires calibration.

- Firmware for the device, with a triggerable mode to log the raw IMU data.

- A Python test script, built with OpenHTF, to:

    - Retrieve data from each board after the calibration process.

    - Calculate the calibration parameters.

    - Verify the quality of the calibration and ensure there are no defects.

    - Save the calibration parameters to the product.

- A database and analytics platform, like TofuPilot, to store the calibration data for traceability and monitoring purposes.

Hardware Components

Climate Chamber

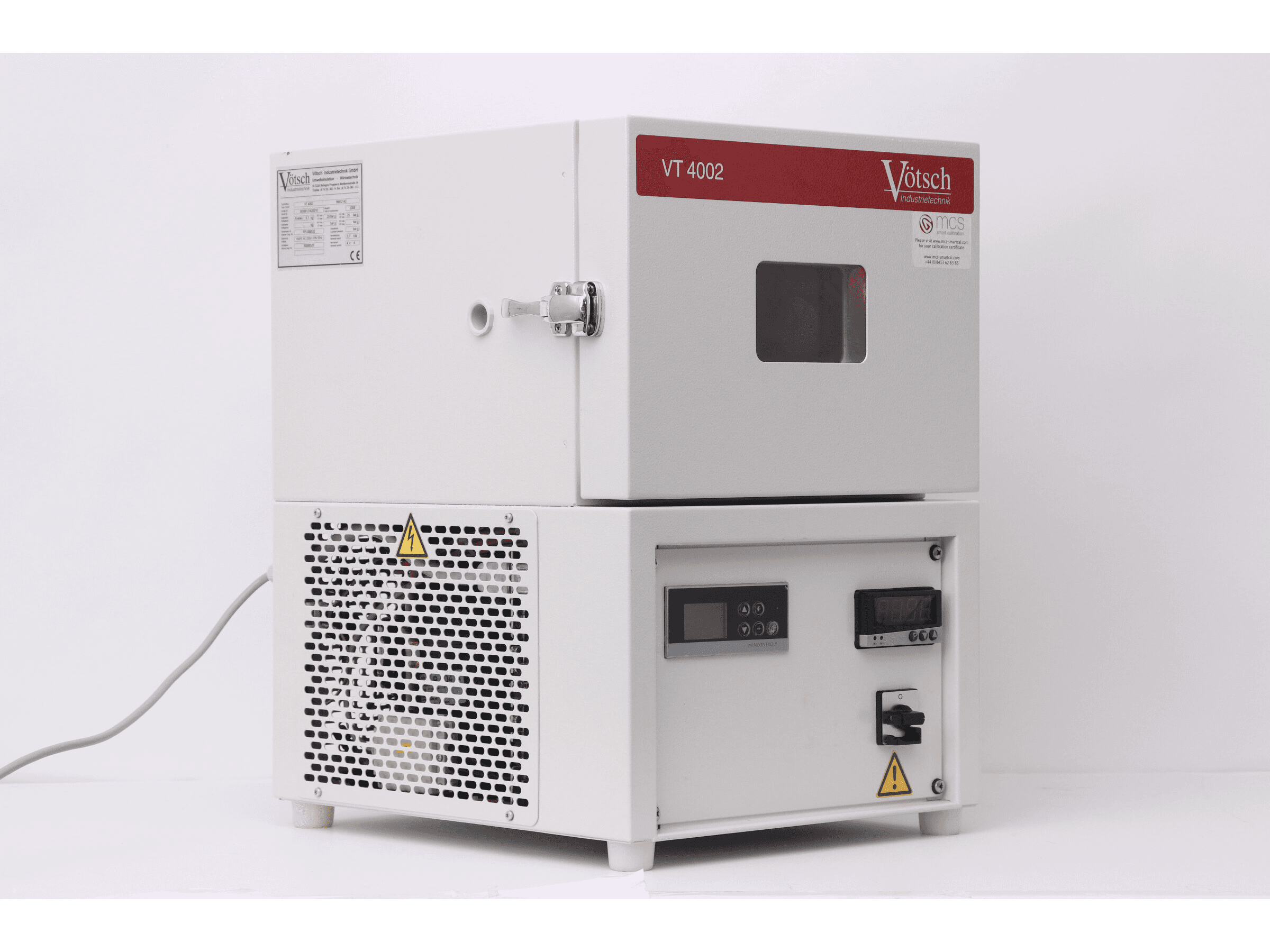

We will use the Vötsch VT4002 temperature test chamber, which has a temperature range of -40°C to +130°C. With its 16-liter volume, it is ideal for designing the test in the lab or for small production runs.

Cycle Program

The IMU's response is not necessarily the same when it heats up as when it cools down. We want the calibration to reflect the sensor's real thermal behavior in both directions. We will therefore set a calibration duration of 2 hours, with 4 temperature cycles ranging from -20°C to 70°C, so that each cycle covers both a heating ramp and a cooling ramp.
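The cycle program can be sketched as a list of setpoint breakpoints. This sketch makes several assumptions:

- The ramps are symmetric triangles with no dwell time.
- The chamber starts at -20°C.
- The helper names are hypothetical; the actual program would be entered in the chamber's controller.

A useful by-product is the implied ramp rate, which should be checked against the chamber's rated heating and cooling rates.

```python
def cycle_profile(t_min_c: float, t_max_c: float, n_cycles: int,
                  total_min: float) -> list[tuple[float, float]]:
    """Return (time_min, setpoint_c) breakpoints for symmetric triangle cycles.

    Each cycle ramps from t_min_c up to t_max_c and back down, so the logged
    data covers both heating and cooling.
    """
    half = total_min / (2 * n_cycles)  # duration of one heating or cooling ramp
    points = [(0.0, t_min_c)]
    for k in range(2 * n_cycles):
        setpoint = t_max_c if k % 2 == 0 else t_min_c  # alternate up and down
        points.append(((k + 1) * half, setpoint))
    return points


def ramp_rate_c_per_min(t_min_c: float, t_max_c: float, n_cycles: int,
                        total_min: float) -> float:
    """Temperature change rate the chamber must sustain on every ramp."""
    return (t_max_c - t_min_c) / (total_min / (2 * n_cycles))


profile = cycle_profile(-20.0, 70.0, n_cycles=4, total_min=120.0)
rate = ramp_rate_c_per_min(-20.0, 70.0, n_cycles=4, total_min=120.0)
```

For 4 cycles between -20°C and 70°C in 120 minutes, each ramp lasts 15 minutes, so the chamber must change temperature at 6°C/min.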

Support Structure

For the lab setup, we'll create a simple 3D-printed support using ESD filament. Foam will be added to reduce vibrations from the climate chamber. These vibrations are likely filtered out during data processing, but minimizing them is still beneficial.

In mass production, we can collaborate with the test team at our EMS to design a support that holds multiple boards to optimize throughput, provides power to them, and fits the dimensions of their temperature test chambers.

Custom Firmware

Throughout the entire calibration process, the device needs to log IMU measurements at a frequency of at least 10 Hz. To enable this, we will develop a dedicated logging mode in the firmware. When activated, this mode starts data acquisition and records the following parameters:

- Timestamp: To track when each measurement was taken.

- Gyroscope data: X, Y, and Z axes in degrees per second (deg/s).

- Accelerometer data: X, Y, and Z axes in meters per second squared (m/s²).

- Internal sensor temperature: This will be used as the reference for calibration.
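The firmware itself would typically be written in C. The record layout, and a check of the 10 Hz requirement that the test script could run, can still be sketched in Python. The column names, unit suffixes, and helper functions below are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from statistics import median


@dataclass
class ImuSample:
    """One logged sample; field names and units are illustrative assumptions."""
    timestamp_s: float
    gyro_x_dps: float
    gyro_y_dps: float
    gyro_z_dps: float
    accel_x_mps2: float
    accel_y_mps2: float
    accel_z_mps2: float
    temp_c: float  # internal IMU temperature, the calibration reference


def write_csv(samples, stream) -> None:
    """Write samples as CSV with a header row matching the dataclass fields."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(ImuSample)])
    writer.writeheader()
    for sample in samples:
        writer.writerow(asdict(sample))


def median_rate_hz(timestamps_s) -> float:
    """Effective logging rate from the median interval between timestamps."""
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    return 1.0 / median(intervals)


samples = [ImuSample(0.1 * i, 0.0, 0.0, 0.0, 0.0, 0.0, 9.81, 25.0) for i in range(5)]
buf = io.StringIO()
write_csv(samples, buf)
rate = median_rate_hz([s.timestamp_s for s in samples])  # ~10 Hz
```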

The logged data can be stored as a JSON or CSV file in the PCBA memory or on an SD card. Most climate chambers have an external pin that activates when a program starts. Connecting this pin to a stabilized power supply outside the chamber switches the supply on automatically at the start of the program and off at its end. Logging therefore covers only the thermal cycle, and not what happens afterward, such as when the operator retrieves the board.

Test Script

Overview

Once the thermal cycle is complete, the DUT's power supply switches off and the log file is ready for retrieval and processing. Operators remove the PCBAs from their calibration support and connect them to the test station. At this point, the script takes over to:

- Connect to the Device Under Test (DUT) and retrieve the acquisition file.

- Validate the acquired data.

- Process the calibration data.

- Validate the calibration parameters.

- Save calibration results to the DUT's internal memory.

- Provide a global pass/fail status.

- Log results to the test database TofuPilot for traceability and analytics.

Execution Framework

For our test script development, Python is a great choice because it's simple, fast to develop with, and comes with powerful libraries for data analysis and visualization.

On top of Python, we'll use the OpenHTF library to manage multiple test phases, log numeric measurements and attachments, and provide a repeatable way to write tests and record results.

Main Script

Using OpenHTF, we define a test script with two phases: one to retrieve the acquisition file from the DUT and apply validation measurements on the raw data, and another to compute thermal calibration parameters and apply validation measurements on the calibration output.

Utility functions are kept in a dedicated utils folder. Interactions with external devices, like the DUT, are handled through OpenHTF plugs. OpenHTF automatically calls each plug's tearDown method at the end of the test to release its resources.

Key Functions

Raw Data Retrieval

The script starts by retrieving raw data logged by the IMU beforehand. A method is created in an OpenHTF plug to simulate the DUT driver and retrieve raw data from its internal memory or SD card. For this example, it's a simple mock function that fetches a CSV file stored in the data folder.
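The sketch below shows what the mock retrieval might do, assuming the log is a CSV file with a header row. `load_raw_log` is a hypothetical helper, not the template's own code. A real plug method would first copy the file from the board's memory or SD card.

```python
import csv
import tempfile
from pathlib import Path

import numpy as np


def load_raw_log(path) -> dict[str, np.ndarray]:
    """Read a raw IMU log (CSV with a header row) into one float array per column."""
    with Path(path).open(newline="") as f:
        rows = list(csv.DictReader(f))
    if not rows:
        raise ValueError(f"{path} contains no samples")
    # Column order follows the CSV header; every value is parsed as a float.
    return {col: np.array([float(row[col]) for row in rows]) for col in rows[0]}


# Usage with a throwaway file standing in for the log copied from the DUT.
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "raw_log.csv"
    log_path.write_text("timestamp_s,gyro_x_dps,temp_c\n0.0,0.01,25.0\n0.1,0.02,25.1\n")
    data = load_raw_log(log_path)
```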

Raw Data Validation

The raw measurements recorded during calibration are critical for defining the calibration parameters, which will significantly affect the product's future performance. However, these measurements can be corrupted by testing conditions or faulty sensors. To catch such issues, we validate the data by defining measurements on the noise density and temperature sensitivity.

Noise Density

Sensor measurements inevitably include noise caused by vibrations from the chamber or the support, or by the sensor itself. This is not a problem in itself, as the calibration algorithm filters out this noise. However, excessive noise could compromise calibration quality. This can happen when the support is poorly fixed, the chamber vibrates excessively, or a sensor is inherently too noisy. To address this, we calculate the noise density using a utility function and validate it against thresholds using OpenHTF's measurement validators.

The datasheet specifies initial noise density limits, which can later be refined using actual production data by computing the mean ± 3σ values. Connecting your test scripts to TofuPilot streamlines this process, as all measurements are logged, and control charts automatically calculate the 3σ limits.
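The sketch below shows one simple way to estimate noise density. It rests on these assumptions:

- The noise is white at the logging rate.
- The noise occupies the one-sided bandwidth fs/2. A real sensor's bandwidth is set by its internal digital filter, so check the datasheet.

First differences remove the slow thermal drift, and for white noise their variance is twice the noise variance. The mean ± 3σ helper mirrors the production-limit refinement described above. All names and demo values are illustrative.

```python
import numpy as np


def noise_density(signal, fs_hz: float) -> float:
    """Estimate white-noise density in signal units per sqrt(Hz).

    Assumes white noise over the one-sided bandwidth fs/2. First differences
    suppress the slow thermal drift, and Var(x[n] - x[n-1]) = 2 * sigma**2.
    """
    sigma = np.std(np.diff(np.asarray(signal, dtype=float)), ddof=1) / np.sqrt(2.0)
    return float(sigma / np.sqrt(fs_hz / 2.0))


def three_sigma_limits(values) -> tuple[float, float]:
    """Mean -/+ 3 sample standard deviations of a measurement across tested units."""
    x = np.asarray(values, dtype=float)
    mean, std = x.mean(), x.std(ddof=1)
    return float(mean - 3.0 * std), float(mean + 3.0 * std)


def within_limits(value: float, low: float, high: float) -> bool:
    """Pass/fail check, analogous to a range validator on a measurement."""
    return low <= value <= high


# Synthetic demo: slow drift plus white noise (sigma = 0.1 deg/s) logged at 100 Hz.
rng = np.random.default_rng(0)
fs = 100.0
t = np.arange(100_000) / fs
gyro_x = 0.5 * np.sin(2 * np.pi * t / 1000.0) + rng.normal(0.0, 0.1, t.size)
nd = noise_density(gyro_x, fs)  # expected near 0.1 / sqrt(50), about 0.0141
```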

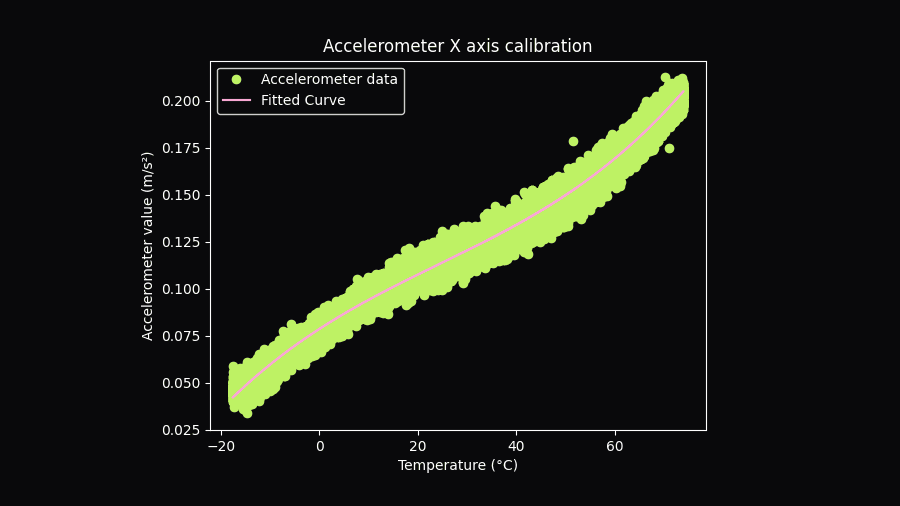

Temperature Sensitivity

Since the goal of the entire process is to characterize the thermal sensitivity of the sensors for accurate compensation models, estimating it beforehand is valuable for identifying abnormal values. Low sensitivity may indicate that the climate chamber program was not launched or that the temperature variation was insufficient. Excessively high sensitivity could signal a sensor defect.

We compute the temperature sensitivity across the entire thermal range and apply validations to both the maximum sensitivity measured and the sensitivity at 25°C. Initial limits are derived from the sensor datasheet and refined over time with production data, using the ± 3σ values computed by TofuPilot.
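The sketch below shows one way to estimate sensitivity:

1. Fit the signal against temperature.
2. Differentiate the fitted polynomial.
3. Report the largest absolute slope over the measured range, and the slope at 25°C.

The 3rd-order fit, the 200-point evaluation grid, and the function name are assumptions. The reference temperature must fall inside the measured range to avoid extrapolating.

```python
import numpy as np


def temperature_sensitivity(temp_c, signal, ref_temp_c=25.0, order=3, n_grid=200):
    """Return (max |d(signal)/dT| over the measured range, d(signal)/dT at ref_temp_c).

    The slope comes from differentiating a polynomial fit of signal vs temperature.
    """
    coeffs = np.polyfit(temp_c, signal, order)
    slope = np.polyder(coeffs)  # coefficients of the derivative polynomial
    grid = np.linspace(np.min(temp_c), np.max(temp_c), n_grid)
    max_abs = float(np.max(np.abs(np.polyval(slope, grid))))
    return max_abs, float(np.polyval(slope, ref_temp_c))


# Synthetic axis output (arbitrary units) that bends with temperature.
temp = np.linspace(-20.0, 70.0, 500)
axis_output = 0.002 * (temp - 25.0) ** 2 + 0.01 * (temp - 25.0)
max_sens, sens_25 = temperature_sensitivity(temp, axis_output)  # ~0.19 and ~0.01
```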

Polynomial Calibration

With the raw data validated, we can proceed with the calibration process. At its core is a function that uses a 3rd-order polynomial fit to model the relationship between sensor response and temperature. The fit produces four coefficients (for T³, T², T and the constant term) per fitted signal. These coefficients replace the need for large lookup tables and are programmed into the device, enabling real-time correction of measurements during operation.
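Below is a minimal sketch of bias compensation with a 3rd-order polynomial. It assumes the sensor is flat and motionless, so the expected reading on each axis is known (0 deg/s for a gyro axis). `np.polyfit` returns four coefficients for a 3rd-order fit, highest power first. The following are illustrative assumptions:

- The helper names.
- The bias-only model; temperature effects on scale factor are not covered.
- The synthetic data.

```python
import numpy as np


def fit_bias_model(temp_c, raw, expected=0.0, order=3):
    """Fit one axis's temperature-dependent bias with a polynomial.

    `expected` is what a flat, motionless sensor should read on this axis
    (0 deg/s for a gyro axis). Returns np.polyfit coefficients, highest power
    first, so a 3rd-order fit yields four values.
    """
    return np.polyfit(temp_c, np.asarray(raw, dtype=float) - expected, order)


def apply_correction(raw, temp_c, coeffs):
    """Run-time step: subtract the modeled bias at the current IMU temperature."""
    return np.asarray(raw, dtype=float) - np.polyval(coeffs, temp_c)


# Synthetic stationary gyro axis; the IMU runs warmer than the chamber air.
rng = np.random.default_rng(1)
temp = np.linspace(-10.0, 80.0, 1000)
true_bias = 1e-5 * temp**3 - 1e-3 * temp**2 + 0.02 * temp + 0.3
gyro_z = true_bias + rng.normal(0.0, 0.01, temp.size)
coeffs = fit_bias_model(temp, gyro_z)
corrected = apply_correction(gyro_z, temp, coeffs)  # now centered on 0 deg/s
```

At run time, the firmware would only need to evaluate the polynomial at the current IMU temperature and subtract it. This is cheap compared with storing and interpolating a lookup table.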

Calibration Validation

With the calibration parameters computed, the next step is to validate their quality by ensuring the sensor model accurately represents the sensor's response.

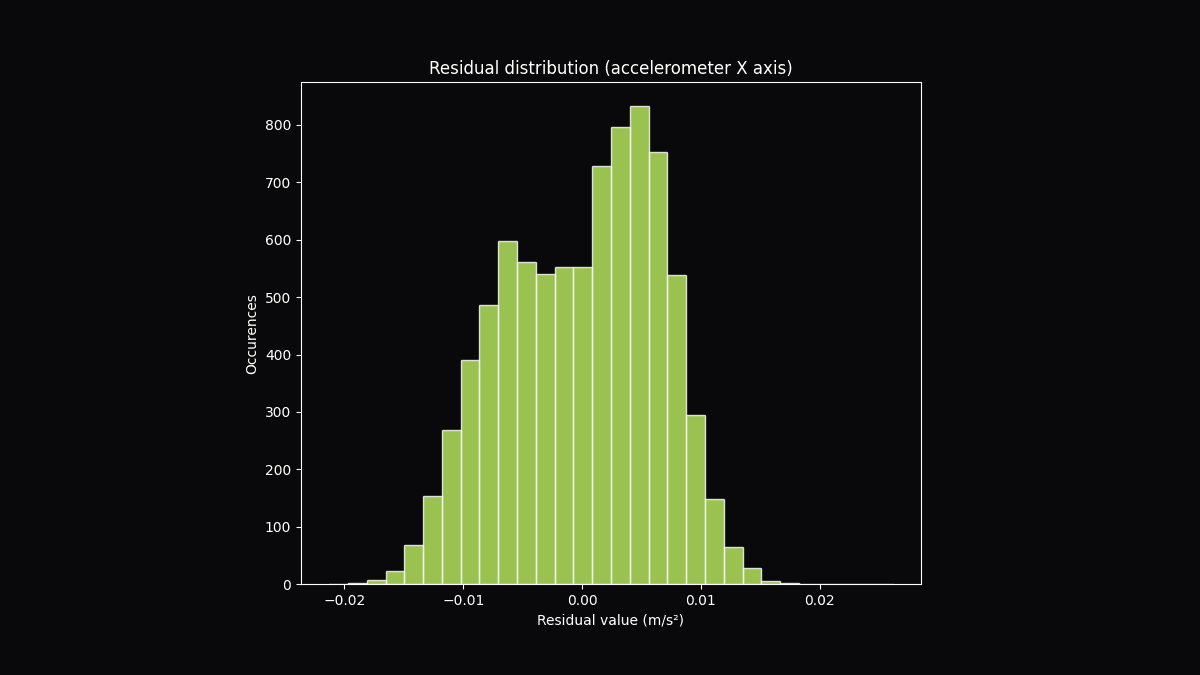

Residuals

To do this, we compute residuals (the differences between the actual measurements and the model's predictions) and assess their characteristics. First, we compute the residual mean to identify biases, such as consistent overestimation or underestimation. Next, we compute the residual standard deviation to measure variability across the temperature range, revealing areas where the model may fit poorly. Finally, we compute the residual peak-to-peak value to assess the overall range of errors. These validations help ensure the calibration is reliable and flag DUTs needing further review or rejection.
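The residual checks can be sketched as follows, with residual = measured - predicted. The sketch computes the global mean, standard deviation, and peak-to-peak value. It also computes a per-bin standard deviation, one possible way to localize temperature regions where the fit is poor. Function names and the binning scheme are assumptions; samples outside the outer bin edges fall into the first or last bin.

```python
import numpy as np


def residual_stats(measured, predicted) -> dict[str, float]:
    """Global residual metrics, with residual = measured - predicted."""
    r = np.asarray(measured, dtype=float) - np.asarray(predicted, dtype=float)
    return {
        "mean": float(r.mean()),           # bias: systematic over/underestimation
        "std": float(r.std()),             # spread of the errors around the model
        "peak_to_peak": float(np.ptp(r)),  # full range of the errors
    }


def residual_std_by_bin(temp_c, residuals, edges):
    """Residual standard deviation per temperature bin (NaN for empty bins).

    Samples outside the outer edges are counted in the first or last bin.
    """
    temp_c = np.asarray(temp_c, dtype=float)
    residuals = np.asarray(residuals, dtype=float)
    idx = np.digitize(temp_c, edges[1:-1])  # bin index in 0 .. len(edges) - 2
    return [
        float(residuals[idx == k].std()) if np.any(idx == k) else float("nan")
        for k in range(len(edges) - 1)
    ]


stats = residual_stats([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 2.0])
per_bin = residual_std_by_bin([0.0, 1.0, 10.0, 11.0], [1.0, -1.0, 2.0, -2.0], [0.0, 5.0, 15.0])
```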

Coefficient of Determination (R²)

Residual metrics focus on local calibration accuracy, but the coefficient of determination (R²) evaluates how well the model represents the sensor's behavior globally. A high R² (close to 1) indicates the model explains most of the variance, while a low or negative R² reveals poor calibration.
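The function below is a direct implementation of the usual definition, R² = 1 - SS_res / SS_tot. Here SS_res is the sum of squared residuals, and SS_tot is the sum of squared deviations of the measurements from their mean. The function name is illustrative.

```python
import numpy as np


def r_squared(measured, predicted) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y = np.asarray(measured, dtype=float)
    ss_res = np.sum((y - np.asarray(predicted, dtype=float)) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    if ss_tot == 0.0:
        raise ValueError("measured signal has zero variance; R^2 is undefined")
    return float(1.0 - ss_res / ss_tot)


perfect = r_squared([1, 2, 3, 4], [1, 2, 3, 4])         # 1.0
mean_only = r_squared([1, 2, 3, 4], [2.5, 2.5, 2.5, 2.5])  # 0.0
reversed_fit = r_squared([1, 2, 3, 4], [4, 3, 2, 1])    # -3.0, worse than the mean
```

One caveat applies to an axis whose thermal drift is small compared with its noise. Its SS_tot is then dominated by noise, so R² can be low even when the residuals are small. Reading R² together with the residual metrics avoids rejecting such units on R² alone.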

Database & Analytics

Sensor calibration, while complex, can be implemented quickly using tools like Python and OpenHTF. This process can be developed during the validation or development phase of a new product and deployed in production when mass manufacturing begins. The quality of a test relies not only on the performance of the processing algorithms and the expertise of the team, but also heavily on the choice of metrics used to validate the measurements. The limits on these metrics improve as more units are tested, since a larger dataset allows more precise 3σ limits to be defined.

To achieve this, it is essential to have a database in place to log test results and an analytics solution from the outset. This enables tracking of tested units, identifying failure causes, and refining validation limits for all units. We developed TofuPilot specifically to address these needs.

OpenHTF Integration

Integrating TofuPilot with OpenHTF is straightforward, requiring only a single line of code. This allows you to log test results automatically, enabling real-time tracking, analytics, and the refinement of validation metrics without disrupting your existing workflow.

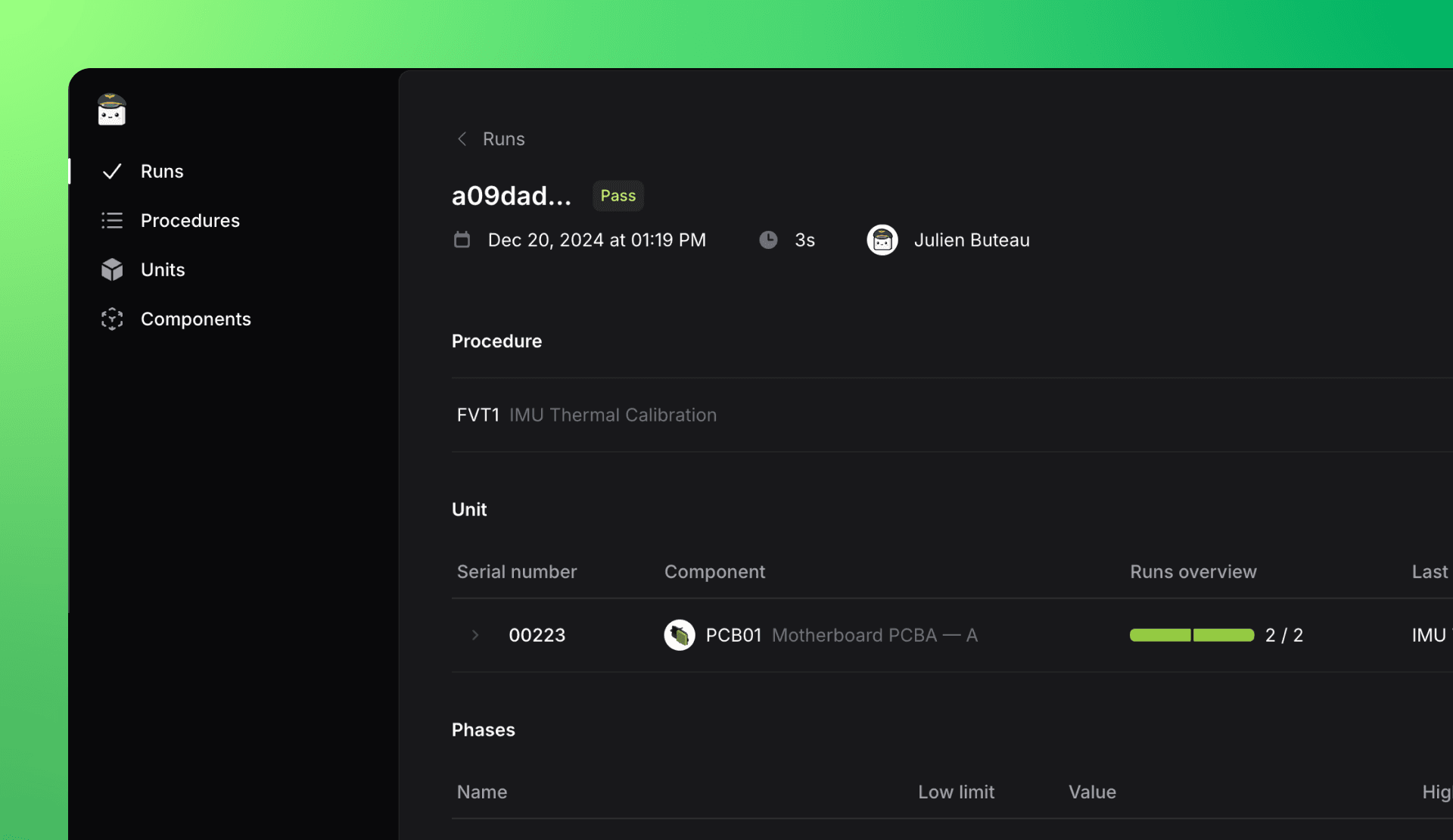

Run Page

After a run has completed, a dedicated page is automatically created in your secure TofuPilot workspace. This page displays the test metadata, such as the serial number, run date, and procedure reference. It also provides the list of the run phases and measurements, along with their limits, units, duration and status. Additionally, any attachments generated during the test are automatically uploaded, making them easily accessible directly from the workspace.

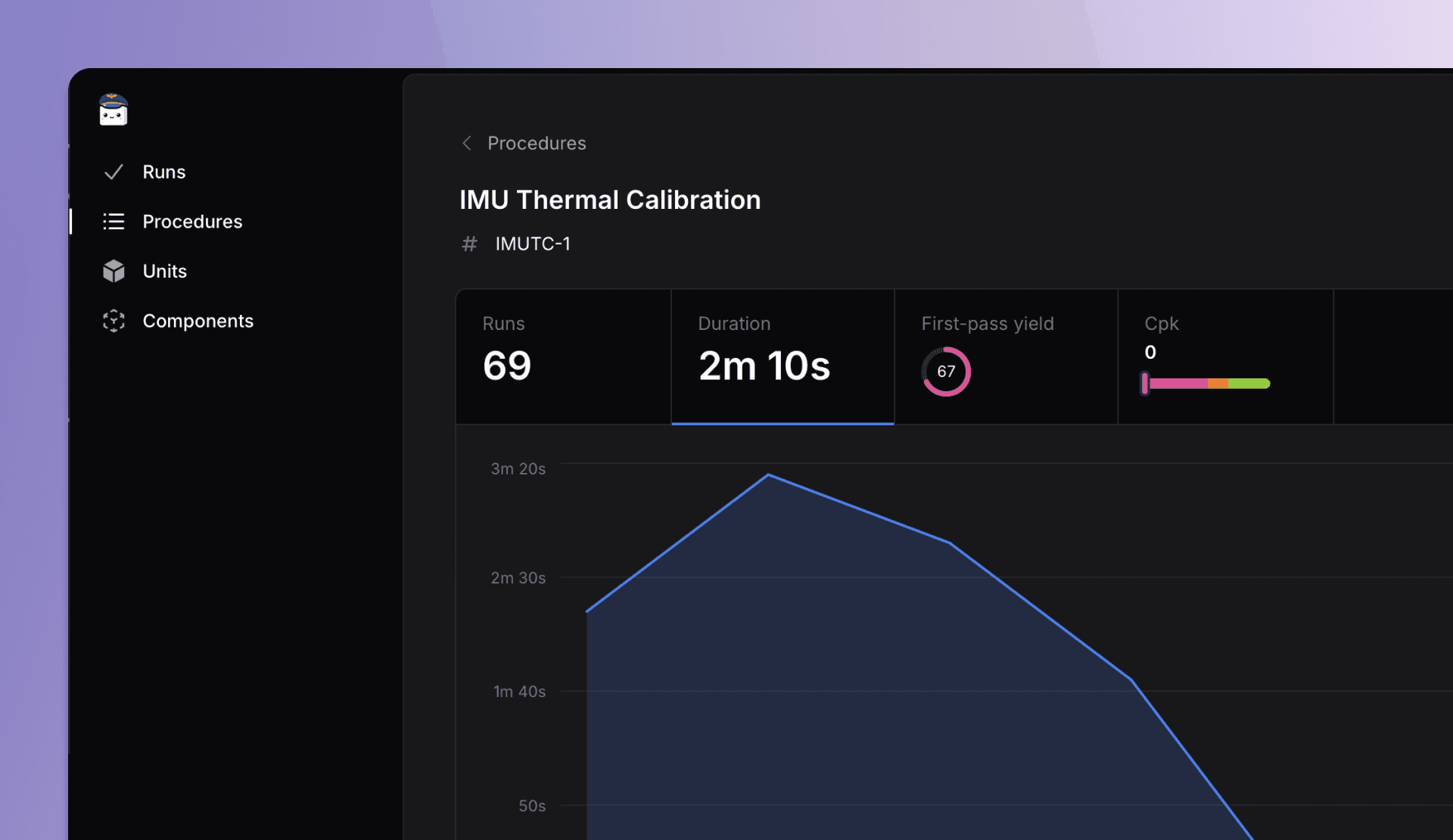

Procedure Analytics

Analyzing the performance of a procedure across recent runs is straightforward with the procedure analytics page. Key metrics such as the run count, average test time, first pass yield, and Cpk are calculated automatically. You can filter the data by date, revision, or batch to narrow down your analysis. The page also provides a detailed breakdown of performance by phase and measurement. You can select a specific phase or measurement to analyze its duration, first pass yield, or Cpk individually. You can also view its control chart to track recent measurements, observe trends, and determine 3σ values.
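For reference, here is a sketch of the textbook Cpk definition: the distance from the mean to the nearer specification limit, divided by 3σ. This is not TofuPilot's implementation, which may, for example, estimate σ differently. The function name and example values are illustrative.

```python
import numpy as np


def cpk(values, lsl: float, usl: float) -> float:
    """Process capability index: distance from the mean to the nearer spec
    limit, in units of 3 sample standard deviations."""
    x = np.asarray(values, dtype=float)
    mean, std = x.mean(), x.std(ddof=1)
    return float(min(usl - mean, mean - lsl) / (3.0 * std))


# Mean 10, sample std 1; the lower limit is nearer, so Cpk = (10 - 4) / 3.
example_cpk = cpk([9.0, 10.0, 11.0], lsl=4.0, usl=19.0)
```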

Unit Traceability

Finally, the traceability of each tested unit is easily accessible through its dedicated page. This page provides the complete history of tests performed for the unit, any related sub-units, and a link to the page dedicated to the revision of the part.

Get Started with TofuPilot

Trying out this template, or connecting your own test scripts to TofuPilot, is incredibly straightforward. You can create a free account and upload your test data in just a few minutes.

With TofuPilot, you'll save months compared to building your own test analytics, while developing and improving your tests faster.